In all of the tables, higher numbers were preferred, so with pf, and RMSE it is preferable to lose.

The Wilcoxon tables need the header row repeated down the side to indicate the comparisons.

Wilcox Tables

Win-Lose Tables

Monday, June 24, 2013

Brian: what I do

MSCS Computer Science - June 2013 -> ???

Research: Data Reduction with Vasil on Public Health Data

Data-Quality

Projects: I am currently working on a project to test some of the current

"Data Cleaning Techniques" outlined below. First to see how many of

our current datasets contain such "problem data" then to see if injecting

"problem data" into existing datasets will actually have any meaningful effect. Some examples of "problem data" as defined here.

Features 1) Identical Features 2) Constant Features 3) Features with Missing Values 4) Features with Implausible Values Instances 1) Identical Cases 2) Inconsistant Cases 3) Cases with Missing Values 4) Cases with Conflicting Values 5) Cases with Implausible Values The current approach outlined in the paper above is to lump all of

the data falling into these categories together then throw them out

thus cleaning the data.

Fayola: Okay, here's the story

Prototype Learning

During my masters, I worked on prototype learning. Created the CLIFF algorithm and applied it to the forensic science domain as a forensic interpretation model.Prototype learning algorithms are designed to eliminated the drawbacks of the K-nearest neighbor algorithm:

- The high computation costs caused by the need for each test sample to find the distance between it and each training sample.

- The storage requirement is large since the entire dataset needs to be stored in memory.

- Outliers can negatively affect the accuracy of the classifier.

- The negative effect of data sets with non-separable and/or overlapping classes.

- The low tolerance to noise.

CLIFF takes a dataset and for each class ranks (power) each attribute sub-range using BORE. Multiply ranks of each row then select the most powerful rows of each class.

Privacy

Now I use CLIFF along with MORPH to privatized defect datasets. CLIFF removes the overlap of classes with instance reduction while MORPH moves remaining data to low density areas and avoids overlap.y = x ± (x − z) ∗ r

- x ∈ D, the original instance;

- z ∈ D the NUN of x;

- y the resulting MORPHed instance.

Erin : What I do

Biography:

Crowd Heuristics Algorithm Learning Kit (CHALK)

Goal: Develop a Virtual Screeing for DNA Aptamers leveraging insights from aptamer researchers

Algorithm: EMFP

Paper pending internal review

Further Research:

- 10 years industry experience as Software and Systems Engineer

- Masters Systems Engineering, University of Arizona, 2006

- Doctoral Student - Computer Engineering

- NSF WVEPSCOR STEM Fellow 2012

- NSF WV NanoSAFE Fellow 2013

- Unsupervised Learning

- Association Learning

- Biclustering

- Large Datasets where Attributes >= Instances

- Bioinformatics

Crowd Heuristics Algorithm Learning Kit (CHALK)

Goal: Develop a Virtual Screeing for DNA Aptamers leveraging insights from aptamer researchers

Algorithm: EMFP

- Combining Association Learning and Probabilistic Clustering into a Biclustering Framework

- Pattern-based, Soft Biclustering Algorithm for Binary Dbs

- High quality ( F, pd, prec), low error ( RMSE, pf ) statistically relevant biclusters

- Compared to BicBin, vanUitert08

Paper pending internal review

Further Research:

- Show that Prelic's Match Score is biased against statistically relevant small clusters in high noise databases. Prelic06

- Develop a streaming EMFP implementation

Vasil: What I do

Master's CS Computer Science student since 2011, expected to graduate on August 2013.

Research scope: Data Reduction. Condense large training data into succinct summaries. Enabling users to review and analyze raw data.

Algorithm: PEEKING2 - A tool for data carving.

Implementation:

Inference:

Experiments:

Applied data mining techniques to reduce the cost of data collection for a public health study conducted by WVU.

Research scope: Data Reduction. Condense large training data into succinct summaries. Enabling users to review and analyze raw data.

Algorithm: PEEKING2 - A tool for data carving.

Implementation:

- Feature Selection via Information Gain.

Prune irrelevant features, select 25% of features with highest Info Gain. - FASTMAP.

Project into the direction of the greatest variability, applying a PCA-like linear time projection. - Grid-clustering.

Recursively split clusters by the median of each projected dimension. - Centroid estimation.

Replace each data cluster with its centroid.

Inference:

- Instance base learning. (k=2 Nearest Neighbor)

Extrapolate between centroids to make predictions. - Contrast set rule learning.

Generated rules estimating the deltas btw. centroids.

Experiments:

- PEEKING2 applied on 10 defect data sets and 10 effort data sets from PROMISE.

- Large data reduction is being observed: 93% of original data is reduced.

- Little information is lost. In most of the cases, k=2 NN applied on condensed data performed as well or better as other state of the art algorithm applied on overall data.

Applied data mining techniques to reduce the cost of data collection for a public health study conducted by WVU.

- Applying Correlation-based feature selection, we could drastically reduced the number of features without significantly impacting the performance of Linear Regression.

- We have also observed some degree of stability across different geographical regions.

- Small samples of stores (20%) can be used to make prediction for the rest.

- Samples are selected to minimize the travel distance btw. stores

Sunday, June 23, 2013

Timm: What I do

For years I've been thinking that our learners are really a menu of options that we can mix and mash to achieve other goals.

Why Bother?

- Its good science

- Its fun

- I've become a little tired of the same dull old data mining in SE paper. Sure, we can get results from data miners on SE data sets. Ok. Next?

- Recent papers report that there’s little to be gained from such algorithm mining because the “improvements” found from this approach are marginal, at best:

- for example, for effort estimation and defect prediction,

- simpler data miners do just as well or better than more elaborate ones [Hall12], [Dejaeger12]

- And, there is tremendous variance in outputs when the same learners are applied to the same data by different people

And if it works, what would we expect?

- Simpler implementations.

- If we cut down to the essence, then the essence should be less than the confusion we cleared away.

- Faster implementations.

- Again, if we cut the crap, the rest should run faster.

- Scalable inference.

- Era of big data. Need scalability.

- More services:

- the parts should be mashable into new services, not currently offered.

What new services?

- Local learning

- Decision mining:

- aka weighted contrast set learning

- planning (what to do)

- learning decisions from now to better

- monitoring (what to avoid)

- learning decisions from now to worse

- Discussion mining

- Learn how a community's bias by what decisions they take, avoid, retake, undo

- Temporal reasoning.

- Anytime inference. Recognition of anomalies. Pinpointing which part of it all needs updating. Updating just that section.

- Privacy:

- Can do

- Quality assurance

- Addressing the issues of Shepperd et al. TSE 2013

- Transfer learning:

- keeping what can be kept, changing what needs to change as we move

- across organizations (cross-company learning)

- across time (dataset shit)

- MOEA :

- the distinction between traditional learning and optimization is bogus.

- Inference at the business level:

- ability to model business goals and tune the learners appropriately.

What business goals:

From Buse & Zimmermann: Information needs, 100+ managers, Microsoft

Under the hood (the current implementation):

Fast spectral learning (map to eigenvectors, cluster, reason from there)

- O(N) inference with Fastmap

- Defect data set (POI-3.). Green=less defective

- Cluster in 2D space (fast)

- Find neighboring clusters C1,C2 where scores(C1) > scores(C2)

- Learn contrast C1 to C2

Papers

- Learning to change projects, PROMISE'12 (preliminary)

- Peeking : submitted to ASE'13

Wednesday, June 19, 2013

Seminar: Mernik on Evolutionary Algorithms

Seminar notification, WVU, CSEE, AI lab

When: 1:30pm Mnday June 24

Where: ESB, room 1011 (map)

What: A Parameter Control Method of Evolutionary Algorithms Using Exploration and Exploitation Measures

Abstract: Exploration and exploitation are omnipresent terms in evolutionary computation community that have been broadly utilized to explain how evolutionary algorithms perform search. However, only recently exploration and exploitation measures were presented in a quantitative way enabling to measure amounts of exploration and exploitation. To move a step further, this talk introduces a parameter control approach that utilizes such measures as feedback to adaptively control evolution processes. With new exploration and exploitation measures, the evolution process generates relatively well results in terms of fitness when applying to a practical chemical engineering problem of fitting Sovova’s model.

Who: Marjan Mernik received the M.Sc. and Ph.D. degrees in computer science from the University of Maribor in 1994 and 1998, respectively. He is currently Professor of Computer Science at the University of Maribor, Slovenia. He is also Visiting Professor of Computer and Information Sciences at the University of Alabama at Birmingham, and at the University of Novi Sad, Faculty of Technical Sciences, Serbia. His research interests include programming languages, compilers, domain-specific (modeling) languages, grammar-based systems, grammatical inference, and evolutionary computations. He is a member of the IEEE, ACM and EAPLS.

Who: Marjan Mernik received the M.Sc. and Ph.D. degrees in computer science from the University of Maribor in 1994 and 1998, respectively. He is currently Professor of Computer Science at the University of Maribor, Slovenia. He is also Visiting Professor of Computer and Information Sciences at the University of Alabama at Birmingham, and at the University of Novi Sad, Faculty of Technical Sciences, Serbia. His research interests include programming languages, compilers, domain-specific (modeling) languages, grammar-based systems, grammatical inference, and evolutionary computations. He is a member of the IEEE, ACM and EAPLS.Tuesday, June 18, 2013

GUI Development With Tkinter

Absolute necessities:

Read and parse an input file composed of centroids.

Display a graph with the centroids.

Allow the end user to select two centroids and view the deltas between them.

Other concerns:

Quality of the UI (Ease of use, etc.)

Portability

(Concerning the GUI package used in development) How easy is install? Can the package do what is needed of it?

Between WxPython and Tkinter, Tkinter showed itself to be an exceedingly easy deployment.

Tkinter is not a complex or versatile package.

Not really important anyways -- graph display/centroid selection is all relatively simple, as is feeding data out to a user.

Development:

The package is extremely simplistic. I can read mouse input and place widgets such as buttons, text displays, "canvases", etc. with ease. The next goal is to build the program so as to display the graph using a canvas widget.

Another necessary program feature is reading file input. I have not yet worked on this, and will begin delving into this area by the end of the week. Concerns in this area are namely portability (due to a differing of file structure/naming archetectures)

Read and parse an input file composed of centroids.

Display a graph with the centroids.

Allow the end user to select two centroids and view the deltas between them.

Other concerns:

Quality of the UI (Ease of use, etc.)

Portability

(Concerning the GUI package used in development) How easy is install? Can the package do what is needed of it?

Between WxPython and Tkinter, Tkinter showed itself to be an exceedingly easy deployment.

Tkinter is not a complex or versatile package.

Not really important anyways -- graph display/centroid selection is all relatively simple, as is feeding data out to a user.

Development:

The package is extremely simplistic. I can read mouse input and place widgets such as buttons, text displays, "canvases", etc. with ease. The next goal is to build the program so as to display the graph using a canvas widget.

Another necessary program feature is reading file input. I have not yet worked on this, and will begin delving into this area by the end of the week. Concerns in this area are namely portability (due to a differing of file structure/naming archetectures)

How to enumerate solutions using Z3

http://stackoverflow.com/questions/11867611/z3py-checking-all-solutions-for-equation

1) Find first solution, record it

2) Rerun SAT blocking the previous solution, find one more solution, record it

3) Rerun SAT blocking the first 2 solutions, and so forth.

Strange that SAT has to be repeated all over again each time. This is why enumeration takes time.

Need to:

1) Read DIMACS model, and run the above routine.

2) Not all the solutions will be useful. What I need is feature-rich solutions.

Tuesday, June 4, 2013

recoding peeker2

diabetes (probability of positive for diabetes)

housing (normalized resale value 0..1, min..max)

poi -1.5*log(p(defects)

Such sweet code:

def Abcx(row,c,left,right):

a = dist(row,left)

b = dist(row,right)

x = (a**2 + c**2 - b**2)* 1.0 / (2*c + 1.0/The.inf)

y = (a**2 - x**2)**0.5

return Thing(x=x,y=y,row=row,a=a,b=b)

def quadrants(t,demo=False):

z = one(t.rows)

left = furthest(z)

right = furthest(left)

out = []

quad1(t,left,right,out,demo)

return out

def quad1(t,l,r,out,demo):

t.left = l

t.right = r

c = t.c = dist(l,r)

x,y = [],[]

for row in t.rows:

xy = Abcx(row,c,l,r)

if xy.a > t.c : return quad1(t,l,row,out,demo)

elif xy.b > t.c : return quad1(t,row,r,out,demo)

else:

x += [xy]

y += [xy]

x = sorted(x,key=lambda z:z.x)

y = sorted(x,key=lambda z:z.y)

hi = len(x)

stop = The.enough(hi)

print "stop>",stop

tile(x,y,0,hi,0,hi,out,stop,demo,2)

def tile(x,y,x0,x2,y0,y2,out,stop,demo,depth=0):

def tile1(x0,x2,y0,y2,out):

tmp = []

for one in x[x0:x2]:

for two in y[y0:y2]:

if one.row.row == two.row.row:

tmp += [one]

if demo:

print '--|'*depth,len(tmp)

if len(tmp) > stop:

tile(x,y,x0,x2,y0,y2,out,stop,demo,depth+1)

else:

out += [tmp]

x1 = x0 + (x2 - x0)/2 y1 = y0 + (y2 - y0)/2 tile1( x0,x1, y0,y1 ,out) tile1( x0,x1, y1,y2 ,out) tile1( x1,x2, y0,y1 ,out) tile1( x1,x2, y1,y2 ,out)

icse'13 report

9 days. 2 conferences. 3 workshops. 1 tutorial.

MSR

data science tutorial

Dapse (data analysis patterns)

RAISE (realizing AI synergies in SE)

MSR

- the paper on the msr'13 error went MUCH better than expected.

- http://storify.com/timmenzies/tim-menziesicse13

- http://www.slideshare.net/timmenzies/msr13-mistake

- one reported mistake gives you integrity. two, on the other hand...

- been thinking much more on best practices for data mining research. we're going to have to get to coding standards, code reviews, etc.

- see regency rules http://dapse.unbox.org/?regency

- lots of interest in learning from the history of msr

- More generally, might be an opportunity for papers on methodological guidance at mst

- let us all start committing to the repos +1 a day.

data science tutorial

- 25 attendees

- over half from industry

- ekrem reports that in the san fran start up culture, anyone with a little data mining skills goes far

CMSBSE (combining Modelling and Search-Based Software Engineering)

- abdel's workshop talk went well

- lots of talk talk talk

- lots of "pattern invention" (grey beards, stroking their chin)

- some "pattern discovery"

- e.g. commit graphs barbara russo https://pro.unibz.it/staff/brusso/Publications/icsews13dapse-id7-p-16144-preprint.pdf

- e.g. analysis patterns barbara russo https://pro.unibz.it/staff/brusso/Publications/icsews13dapse-id6-p-16144-preprint.pdf

- my new religon: data analysis patterns

- book #2 (sharing data and models) is now underway

- book #1 (data analysis patterns book) scuttled by dapse workshop. field currently too diverse to accept analysis patters

- abdel's talk went well. and, the ever present question, why didn't you use a theorem prover

- sonia (from wayne state)'s talk went well

RAISE (realizing AI synergies in SE)

Wednesday, May 1, 2013

Vargha-Delaney Effect Size

Hedge's effect size should not be used when your data isn't known to have a normal distribution, thus you would have to use a non-parametric effect size calculation, e.g. Vargha-Delaney. Check out the description and R code.

http://doofussoftware.blogspot.com/2012/07/measuring-effect-size-with-vargha.html

http://doofussoftware.blogspot.com/2012/07/measuring-effect-size-with-vargha.html

Wednesday, April 24, 2013

pom decision frequencies + html5

POM3 Results (Showing POM3A and POM3B):

10 even width bins between min and maxmin <= val < bin1

bin1 <= val < bin2

...

etc

HTML5

Popular Buzz Word of recent; very powerful (cool and fast) with Javascript + jquery + CSS3.(initially opened in 2011, updates in 2012, became a WC3 recommendation Dec 2012.)

- Some stuff that's new with HTML5: http://net.tutsplus.com/tutorials/html-css-techniques/25-html5-features-tips-and-techniques-you-must-know/

- HTML5 is the way to go today.

Tools:

http://craftyjs.com/ - a game library set of tools for javascript to make html5 games

(one of many, there's also game engines and interface-only designers not-for-free)

http://html5games.com/ - popular web site to submit your games and gain audiences/revenue

(there are several other sites, as well)

My Fun-box site & Test of HTML5

fun.unbox.org/testgameIBEA vs NSGAII for large feature models

10 runs for 2000sec each. pop=300, xover=0.05, mut=0.001, 5 obj

| IBEA With Rule Fixing | ||||||

| FM | Features | Rules | %correct | TimeToAnyC | TimeTo50C | TimeTo100C |

| toybox | 544 | 1020 | 40.4% | 28.4 | NA | NA |

| axTLS | 684 | 2155 | 99.6% | 45.2 | 187 | 224 |

| ecos | 1244 | 3146 | 100% | 93.2 | 115 | 223 |

| freebsd | 1396 | 62183 | 98% | 315 | 501 | NA |

| fiasco | 1638 | 5228 | 99.6% | 170 | 656 | 810 |

| uClinux | 1850 | 2468 | 38% | 75 | NA | NA |

| busybox | 6796 | 17836 | 1.98% | 1350 | NA | NA |

| NSGA-II With Rule Fixing | ||||||

| FM | Features | Rules | %correct | TimeToAnyC | TimeTo50C | TimeTo100C |

| toybox | 544 | 1020 | ||||

| axTLS | 684 | 2155 | ||||

| ecos | 1244 | 3146 | 0% | NA | NA | NA |

| freebsd | 1396 | 62183 | 0% | NA | NA | NA |

| fiasco | 1638 | 5228 | 2% | 61 | NA | NA |

| uClinux | 1850 | 2468 | 3.33% | 26 | NA | NA |

| busybox | 6796 | 17836 | 1.17% | 1110 | NA | NA |

Wednesday, April 17, 2013

DIMACS feature models

| IBEA With Rule Fixing | NSGA-II With Rule Fixing | |||||||||

| FM | Features | Rules | %correct | TimeToAnyC | TimeTo50C | TimeTo100C | %correct | TimeToAnyC | TimeTo50C | TimeTo100C |

| toybox | 544 | 1020 | 25% | 23.2 | NA | NA | 12.4% | |||

| axTLS | 684 | 2155 | 100% | 43.8 | 333 | NA | ||||

| ecos | 1244 | 3146 | 100% | 87.8 | 129 | NA | ||||

| freebsd | 1396 | 62183 | 98% | 377 | 717 | NA | ||||

| fiasco | 1638 | 5228 | 100% | 104 | 444 | NA | ||||

| uClinux | 1850 | 2468 | 32% | 47.8 | 229 | NA | ||||

| busybox | 6796 | 17836 | 18% | 1190 | NA | NA | ||||

Wednesday, April 10, 2013

GALE + PCG

Updates to GALE:

What if while recursing in RRSL clustering method, we only go down 1 level (split only a single time)? Previously, we were going down 4 levels (because rstop = square root(mu=100)).

NOTE: We tested below on a really small sample for quick results, using rho=0.005. That means we were running GALE for a -long- time, and still get results under <30 numEval. With rho=0.10, the numEval would be ~ 4-5.

Introducing SBSE into game design in two ways:

1) "Outer Loop"

decisions = {all the features used to build the game} *these are categories and non-numeric*

evaluate = the player plays the game, and rates it 0-5 stars across categories

objectives = the player rating (aesthetic, story, gameplay, replay, overall)

A designer builds the game (a supply of the decisions). Then, a player plays it (evaluates it across objectives). The evaluation is human feedback, which is subject to human error and bias. It takes a long time for a player to play the game. It takes a long time to get the feedback. And the feedback may not even be correct. SBSE can be theoretically used, but it would take decades.

GALE can speed this up, tremendously. But there's still the risk of human error in the feedback. The method of evaluation is subjective here. And the decisions may not be easily modelled at all.

2) "Inner Loop"

There are many "inner loops" that complete the single "outer loop" for the whole game.

decisions = {parameters that define one feature of the game} *introducing PCG*

evaluate = the player plays the game, and is scored on his/her performance w/ respect to that feature

objectives = the player score (points, accuracy%, evade%, time taken, etc)

A designer builds the game. The game supplies the decisions to a PCG module in the game; i.e. the generation of random AI (speed, strength, defense) enemies. The player plays the stage in the game for which these enemies are met. After completion of the stage (or failure to), the player is graded across objectives: for example, points, accuracy of hitting the enemies, evasion rate of avoiding enemies, time taken before death or completion, etc.

The inner loop provides SBSE a way to search on the fly while the gamer is playing. It avoids the issue of human bias/error. A single stage can be rapid, and there is no overhead of feedback. The game attunes itself according the players that play it, and searches for optimal PCG that can provide entertainment on the assumption that when players score well, they are having fun. With GALE, a user only needs to play ~2-8 games before seeing decent results, and after 20 results, the optimality should be superb.

What if while recursing in RRSL clustering method, we only go down 1 level (split only a single time)? Previously, we were going down 4 levels (because rstop = square root(mu=100)).

NOTE: We tested below on a really small sample for quick results, using rho=0.005. That means we were running GALE for a -long- time, and still get results under <30 numEval. With rho=0.10, the numEval would be ~ 4-5.

Introducing SBSE into game design in two ways:

1) "Outer Loop"

decisions = {all the features used to build the game} *these are categories and non-numeric*

evaluate = the player plays the game, and rates it 0-5 stars across categories

objectives = the player rating (aesthetic, story, gameplay, replay, overall)

A designer builds the game (a supply of the decisions). Then, a player plays it (evaluates it across objectives). The evaluation is human feedback, which is subject to human error and bias. It takes a long time for a player to play the game. It takes a long time to get the feedback. And the feedback may not even be correct. SBSE can be theoretically used, but it would take decades.

GALE can speed this up, tremendously. But there's still the risk of human error in the feedback. The method of evaluation is subjective here. And the decisions may not be easily modelled at all.

2) "Inner Loop"

There are many "inner loops" that complete the single "outer loop" for the whole game.

decisions = {parameters that define one feature of the game} *introducing PCG*

evaluate = the player plays the game, and is scored on his/her performance w/ respect to that feature

objectives = the player score (points, accuracy%, evade%, time taken, etc)

A designer builds the game. The game supplies the decisions to a PCG module in the game; i.e. the generation of random AI (speed, strength, defense) enemies. The player plays the stage in the game for which these enemies are met. After completion of the stage (or failure to), the player is graded across objectives: for example, points, accuracy of hitting the enemies, evasion rate of avoiding enemies, time taken before death or completion, etc.

The inner loop provides SBSE a way to search on the fly while the gamer is playing. It avoids the issue of human bias/error. A single stage can be rapid, and there is no overhead of feedback. The game attunes itself according the players that play it, and searches for optimal PCG that can provide entertainment on the assumption that when players score well, they are having fun. With GALE, a user only needs to play ~2-8 games before seeing decent results, and after 20 results, the optimality should be superb.

Tuesday, April 9, 2013

Wednesday, March 20, 2013

EMFP Alg, early stop implemented

My algorithm now stops early if FP Growth finds the same set of in two sequential runs.

This likely means that the attributes do not form statistically significant clusters and that there are likely no more attribute sets that will be stat. sig. because FP Growth finds the most common column sets first.

It is currently running with a cutoff of a 95% Binomial CI, I can lower the CI percentage as Dr. Menzies suggested.

Below is the latest printout demonstrating the early exit.

time ./trial.sh main synthDb_M100_N500_m5_n10_p0.050000_num1-1.arff

***Initial Values***

confidence levels: 0.99 0.95 0.90 0.85 0.80

min perc levels (rows): 0.05 0.04 0.03 0.02 0.01

min cols: 2

FPGrowth Step level: .01

FPGrowth Upper limit: 0.5

---------------------------------------

number of times in loop= 1

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

in idCentroids file 3 cluster1 cluster2 cluster3 numArffs= 0

End Clause

#Cols

#Cols

#Cols

#Cols

#Cols

#Cols

numArffs= 1

---------------------------------------

number of times in loop= 2

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

NumDiff 6

in idCentroids file 3 cluster1 cluster3 cluster4 numArffs= 1

End Clause

#Cols

#Cols

#Cols

#Cols

#Cols

#Cols

numArffs= 2

---------------------------------------

number of times in loop= 3

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

NumDiff 0

FP Same Cols-break

real 0m19.057s

user 0m15.725s

sys 0m1.425s

This likely means that the attributes do not form statistically significant clusters and that there are likely no more attribute sets that will be stat. sig. because FP Growth finds the most common column sets first.

It is currently running with a cutoff of a 95% Binomial CI, I can lower the CI percentage as Dr. Menzies suggested.

Below is the latest printout demonstrating the early exit.

time ./trial.sh main synthDb_M100_N500_m5_n10_p0.050000_num1-1.arff

***Initial Values***

confidence levels: 0.99 0.95 0.90 0.85 0.80

min perc levels (rows): 0.05 0.04 0.03 0.02 0.01

min cols: 2

FPGrowth Step level: .01

FPGrowth Upper limit: 0.5

---------------------------------------

number of times in loop= 1

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

in idCentroids file 3 cluster1 cluster2 cluster3 numArffs= 0

End Clause

#Cols

#Cols

#Cols

#Cols

#Cols

#Cols

numArffs= 1

---------------------------------------

number of times in loop= 2

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

NumDiff 6

in idCentroids file 3 cluster1 cluster3 cluster4 numArffs= 1

End Clause

#Cols

#Cols

#Cols

#Cols

#Cols

#Cols

numArffs= 2

---------------------------------------

number of times in loop= 3

Confidence Perc = 0.99

Min Rules Perc= 0.05

dvAvg dims=500

dbAvg rows=100

running FPGrowth

500,Number of Cols from FPGrowth= 9

NumDiff 0

FP Same Cols-break

real 0m19.057s

user 0m15.725s

sys 0m1.425s

Playing with DIMACS feature models -- updated 4/10/2013

Running IBEA for 1 hour over the available DIMACS feature models

Parameters: Population = 300, constrained mutation at rate = 0.001, NO crossover.

Technique: Before evolution, TWO rounds of rule checking are made:

1) First round: all features standing by themselves in a line are fixed: some are selected (mandatory), and some are deselected ("prohibited"!!!)

2) Second round: all features that share a line with "fixed features" from first round, they become fixed as well.

Mutation is constrained so that it doesn't mess with the fixed features.

1) Verify how many features are fixed in the first and second rounds of rule checking. Do we benefit by making further rounds? Done. No benefit expected from further rounds.

2) The first 7 feature models are "easy"... why? Do they have a lot of "fixed" features? or just because they're smaller? Done. ecos and freebsd have very few "fixed" features, yet they are solved easily... Size is a factor that is part of the larger notion of "hardness" or "complexity".

3) These 7 can be used in experiments to compare IBEA with others (NSGAII etc)... We already know others will suck. This could be our "scale-up" verification. No.

4) We could also compare this "rule detection" method with complete randomness (ICSE'13 style) but we've already learned not to rely on complete randomness. No.

5) We could try to optimize two objectives only, then plug the result into 5-objective optimization... This can save a lot of wasted "number crunching". We could run 2-objective and identify the rules that are most frequently violated, make recommendations, take feedback, fix a bunch of features... run again.. sound familiar?

Parameters: Population = 300, constrained mutation at rate = 0.001, NO crossover.

Technique: Before evolution, TWO rounds of rule checking are made:

1) First round: all features standing by themselves in a line are fixed: some are selected (mandatory), and some are deselected ("prohibited"!!!)

2) Second round: all features that share a line with "fixed features" from first round, they become fixed as well.

Mutation is constrained so that it doesn't mess with the fixed features.

| Skipped features | Skipped Rules | |||||||||

| FM | Features | Rules | %correct | Round 1 | Total | Total | ||||

| toybox | 544 | 1020 | 100% | 361 | 66% | 363 | 67% | 394 | 39% | |

| axTLS | 684 | 2155 | 100% | 382 | 56% | 384 | 56% | 259 | 12% | |

| ecos | 1244 | 3146 | 100% | 10 | 1% | 19 | 2% | 11 | 0% | |

| freebsd | 1396 | 62183 | 100% | 3 | 0% | 3 | 0% | 20 | 0% | |

| fiasco | 1638 | 5228 | 100% | 995 | 61% | 995 | 61% | 553 | 11% | |

| uClinux | 1850 | 2468 | 100% | 1234 | 67% | 1244 | 67% | 1850 | 75% | |

| busybox | 6796 | 17836 | 100% | 3947 | 58% | 3949 | 58% | 2644 | 15% | |

| uClinuxconfig | 11254 | 31637 | 4% | 6025 | 54% | 6027 | 54% | 4641 | 15% | |

| coreboot | 12268 | 47091 | 1% | 4592 | 37% | 4672 | 38% | 2060 | 4% | |

| buildroot | 14910 | 45603 | 0% | 6755 | 45% | 6759 | 45% | 3534 | 8% | |

| embtoolkit | 23516 | 180511 | 0% | 6370 | 27% | 6619 | 28% | 657 | 0% | |

| freetz | 31012 | 102705 | 0% | 14444 | 47% | 14493 | 47% | 3911 | 4% | |

| Linux 2.6.32 | 60072 | 268223 | 0% | 32329 | 54% | 32479 | 54% | 18734 | 7% | |

| Linux 2.6.33 | 62482 | 273799 | 0% | 33597 | 54% | 33766 | 54% | 19394 | 7% | |

| Linux 2.6.28.6 | 6888 | 343944 | 0% | |||||||

1) Verify how many features are fixed in the first and second rounds of rule checking. Do we benefit by making further rounds? Done. No benefit expected from further rounds.

2) The first 7 feature models are "easy"... why? Do they have a lot of "fixed" features? or just because they're smaller? Done. ecos and freebsd have very few "fixed" features, yet they are solved easily... Size is a factor that is part of the larger notion of "hardness" or "complexity".

5) We could try to optimize two objectives only, then plug the result into 5-objective optimization... This can save a lot of wasted "number crunching". We could run 2-objective and identify the rules that are most frequently violated, make recommendations, take feedback, fix a bunch of features... run again.. sound familiar?

Wednesday, March 13, 2013

Sticking points in TSE paper

1-

We’re actually expanding two

papers: ICSE and CMSBSE.

2-

In ICSE we fix the number

of fitness evaluations. In CMSBSE (and TSE) we fix the run time… A reviewer

complained about this in CMSBSE…

3-

Statistical analysis needed

in addition to effect size.

Tuesday, March 12, 2013

Wednesday, March 6, 2013

SBSE MOO in Few Evals: GALE

GALE = Geometric Active Learning Evolution

Hypervolume versus IBD and IBS

- IBD = how good we are

- IBS = how spread out we are

- Both based on IBEA indicator

- RRSL should be GALE, here

Analysis Notes:

- RRSL wins badly on NumEval

- NSGA-II outperforms RRSL in runtime for small MOPs that are cheap to evaluate. But when models become expensive (i.e. POM2), NSGA-II becomes slow and RRSL wins in runtime

- RRSL is a very decision-sided routine. For ZDT1 (30 decisions) and osyzcka2( 5 decisions ), the runtime of RRSL is punished

Paper "3/4 Draft":

- nothing in here, yet: http://unbox.org/things/var/joe/active/

- promise to be integrated in linux/latex fully by full draft time

- https://www.dropbox.com/s/y9ey0hr6agsolzt/solving_problems_in_few_evaluations.pdf?m

Hypervolume versus IBD and IBS

- IBD = how good we are

- IBS = how spread out we are

- Both based on IBEA indicator

More Results:

- added some more flavors just so we have one of every cookie

Notes:

- Left table shows the Raw Averages for everyone over 20 repeats

- Right table uses t-statistic to generate confidence interval (99%)

- 7-3-2 on wins-losses-ties when only considering IBD (quality)

- WIN or LOSS = no overlap of confidence interval- RRSL should be GALE, here

Analysis Notes:

- RRSL wins badly on NumEval

- NSGA-II outperforms RRSL in runtime for small MOPs that are cheap to evaluate. But when models become expensive (i.e. POM2), NSGA-II becomes slow and RRSL wins in runtime

- RRSL is a very decision-sided routine. For ZDT1 (30 decisions) and osyzcka2( 5 decisions ), the runtime of RRSL is punished

Paper "3/4 Draft":

- nothing in here, yet: http://unbox.org/things/var/joe/active/

- promise to be integrated in linux/latex fully by full draft time

- https://www.dropbox.com/s/y9ey0hr6agsolzt/solving_problems_in_few_evaluations.pdf?m

Wednesday, February 27, 2013

Not Global vs Local

I'm playing with the idea of a global and local rules family, where local rules are the children and global rules the grandparents. The parents are the middle rules. In other words, the local rules are subsets of global and middle rules.

The goal - provide the project manager with a family of options. If a local rule is best but would prefer a more global solution, find its global and middle relatives and see if they work just as well.

My initial experiment is a little flawed by my simple rule score metric, P(defects), but results are useful.

Wednesday, February 20, 2013

TSE paper outline

1- The ICSE'13 result: All algorithms perform almost the same at 2 or 3 objectives. IBEA beats all by a large margin when optimizing 4 or 5 objectives.

2- The CMSBSE'13 result: Lower rates are better. This is a brief section, IBEA vs NSGAII vs SPEA2, E-Shop only, high rates vs low rates. It can be extended to other algorithms and feature models, but then it's dwarfed by the tree mutation results.

3- The comparison of algorithms is run for 20 feature models, with effect size calculations... Confirms superiority of IBEA. Lower rates are used here to give all algorithms their best chance to perform.

4- Tree Mutation: By removing random crossover and mutation and deploying tree mutation [at a low rate 0.01], IBEA performs better (converges faster) than 1 & 2 above.

4-a) The improvement is 3-orders-of-magnitude when comparing E-Shop with ICSE'13 setup vs Tree Mutation. In fact, we now have good results at 2 sec and very good at 10 sec, thus interactive responses are possible. [Comparison: With ICSE'13 IBEA, We achieve HV=0.28 & 52% correct solutions after 3 hours. With Tree Mutation IBEA, We achieve HV=0.25 & 99% correct solutions after 8 seconds. The 0.28 figure is inflated by incorrect solutions that achieve supreme objective values... 3hours/8sec = 1350].

4-b) The improvement can also be demonstrated on all 20 feature models, only the numbers are less than 3-orders-of-magnitude... [This needs to be repeated because of the bug we've just discovered].

5- Tree Mutation does not help other algorithms much... This can be demonstrated on all 20 models. [This needs to be repeated because of the bug we've just discovered]. Should this be included? If we don't include it here, is it worthy of a whole new paper?

5-a) Comparison of 6 algorithms with and without Tree Mutation. found at

http://www.unbox.org/things/var/abdel/13/tse/Comparison of Methods Feb-Mar 2013.pdf

5-a) Comparison of 6 algorithms with and without Tree Mutation. found at

http://www.unbox.org/things/var/abdel/13/tse/Comparison of Methods Feb-Mar 2013.pdf

Biclustering Comparisons

BicBin comparisons VanUitert08

Comparison using Artificial Db

p {.001:.05} # prob of 1s

M = 10, 100, 1000 # rows

N = 50, 500, 5000 # cols

m x n = {2:10:50} # size of biclusters

create 5 dbs for each combination

run each algorithm 50 X per alg

average the fp rates for each combination

results shown in heat maps

Real Dbs

TRANSFAC v 10.3 Wingender00

transaction factor binding site data

STRING vonMering07

protein to protein interactions

GO categories Prelic06

measuring distances between the motifs found by these dbs

don't understand all of this group yet

Wednesday, February 13, 2013

Over the horizon: ML + SBSE = What?

here's the talk i gave at UCL last week

so come see what i say about you behind your back (sorry, only could get in references to ekrem, joe krall, fayola into this talk- but clearly vasil's work fits this framework as well)

Wednesday, February 6, 2013

A12measure

The standard story:

Many problems with the standard story, especially when comparing (say) 20 different configurations of a learner.

- Say each test has confidence of 0.95

- Net test has confidence of 0.95^20 = 0.36

- The complexity of the test makes me lose confidence in my ability to understand the test

- For the parametric tests, too many parametric assumptions.

- The blurred telescope effect (Friedman-Nemenyi)

A12

The Vargha and Delaney A12 statistics is a non-parametric effect size measure.Given a performance measure M seen in m measures of X and n measures of Y, the A12 statistics measures the probability that running algorithm X yields higher M values than running another algorithm Y.

A12 = #(X > Y)/mn + 0.5*#(X=Y)/mn

- A. Vargha and H. D. Delaney. "A critique and improvement of the CLcommon language effect size statistics of McGraw and Wong". Journal of Educational and Behavioral Statistics, 25(2):101-132, 2000.

rA12

A12 studies two treatments. rA12 handles mulitple treatments using a Scott Knott procedure; i.e. divide a list of treatments into two lists L1 and L2 _ by finding the division that maximizes the expected value of the sum of square of the mean differences before and after divisions; i.e.

L = union(L1,L2)

|L1| * abs(L1.mu - L.mu)^2 + |L2| * abs(L2.mu - L.mu)^2

rA12 calles itself recursively on the lsts terminating when no further useful division can be found (and useful is checked by the A12 procedure.

For more on the Scott Knott procedure (where ANOVA is used to define useful), see

- N. Mittas and L. Angelis, "Ranking and clustering software cost estimation models through a multiple comparisons algorithm" IEEE Transactions on Software Engineering (pre-print), 2012.

Source code

active learning for multi-objective optimization

- same orders of magnitude as joe

- technique seems a little more complex

- no execution on standard models (i think)

Marcela Zuluaga, Andreas Krause, Guillaume Sergent and Markus Püschel (to appear in Proc. International Conference on Machine Learning (ICML), 2013)

Active Learning for Multi-Objective Optimization

Active Learning for Multi-Objective Optimization

In many fields one encounters the challenge of identifying, out of a pool of possible designs, those that simultaneously optimize multiple objectives. This means that usually there is not one optimal design but an entire set of Pareto-optimal ones with optimal trade-offs in the objectives. In many applications, evaluating one design is expensive; thus, an exhaustive search for the Pareto-optimal set is unfeasible. To address this challenge, we propose the Pareto Active Learning (PAL) algorithm which intelligently samples the design space to predict the Pareto-optimal set. Key features of PAL include (1) modeling the objectives as samples from a Gaussian process distribution to capture structure and accomodate noisy evaluation; (so parametric assumptions) (2) a method to carefully choose the next design to evaluate to maximize progress; and (3) the ability to control prediction accuracy and sampling cost. We provide theoretical bounds on PAL’s sampling cost required to achieve a desired accuracy. Further, we show an experimental evaluation on three real-world data sets (only 3???). The results show PAL’s effectiveness; in particular it improves significantly over a state-of-the-art multi-objective optimization method, saving in many cases about 33% evaluations to achieve the same accuracy.

Download: http://goo.gl/68ZGY

Wednesday 2-6-2013

Linux early results http://www.unbox.org/things/var/abdel/13/linux/

CMSBSE'13 http://www.unbox.org/things/var/abdel/13/cmsbse13/

RAISE'13 http://www.unbox.org/things/var/abdel/13/raise13/

CMSBSE'13 http://www.unbox.org/things/var/abdel/13/cmsbse13/

RAISE'13 http://www.unbox.org/things/var/abdel/13/raise13/

Wednesday, January 23, 2013

Nate Silver: The Numbers Don't Lie

http://www.youtube.com/watch?v=GuAZtOJqFr0

A 56-minute talk about the signal and the noise by Nate Silver

A 56-minute talk about the signal and the noise by Nate Silver

Wednesday, January 16, 2013

RRSL Report

JMOO

1) We have a system for very easily instancing new problems, and have already implemented most baselines such as Fonseca, Srinivas, ConstrEx and more. Capabilities can handle constrained or non-constrained problems.

Modifiable Genetic Algorithm Approach

2) A basis for a very plain and dull GA is as follows:

- Read a 100-row dataset for the problem (so that we begin on a consistent standpoint).

- Evaluate all of these as the initial population and record Median & IQR metrics for each objective.

- Begin an iterative process, stopping at a given criteria (# of runs, or %change between iterations):

- - - a: Select offspring for CM

- - - b: Crossover for the offspring

- - - c: Mutation for the offspring

- - - d: Combine population with the new offspring and select a new population from these

RRSL GA

3) Different Algorithms follow from adjusting (a,b,c,d) above:

- RRSL GA:

- - - a: Selection of offspring via rrsl non-dominating leaves. Maintain two copies of these offspring.

- - - b: Crossover of the offspring via moving averages idea

- - - c: Mutation for the offspring via Differential Evolution

- - - d: Tournament Selection on the (few) offspring + adjustedoffspring

NSGA2/SPEA2

4) Benchmark Algorithms also follow from adjusting (a,b,c,d) above:

- NSGA2/SPEA2:

- - - a: Selection of offspring using k-round tournament selection

- - - b: Standard Crossover to swap attributes for a given pair of individuals

- - - c: Standard Mutation to re-roll the dice on some individual's attribute chosen decision

- - - d: Selection via NSGA2 or SPEA2 sub-routines.

Goals

5) The Goal of the Research:

- We want RRSL GA to perform as well as NSGA2/SPEA2.

- There's an argument on what it means to be better. Solutions lie across the optimal pareto frontier, but different algorithms may discover solutions at different areas along the frontier.

- The absolute emphasis of the research: Improving upon the number of objective function evaluations. The theory is that RRSL GA will require log N the number of evaluations needed by NSGA2/SPEA, and still do just as well.

Results

6) Results

charts: http://unbox.org/stuff/var/joe/joe/fall2012/pom2rrslTesting/charts/1-16-2013/

--- full results pending ---

1) We have a system for very easily instancing new problems, and have already implemented most baselines such as Fonseca, Srinivas, ConstrEx and more. Capabilities can handle constrained or non-constrained problems.

Modifiable Genetic Algorithm Approach

2) A basis for a very plain and dull GA is as follows:

- Read a 100-row dataset for the problem (so that we begin on a consistent standpoint).

- Evaluate all of these as the initial population and record Median & IQR metrics for each objective.

- Begin an iterative process, stopping at a given criteria (# of runs, or %change between iterations):

- - - a: Select offspring for CM

- - - b: Crossover for the offspring

- - - c: Mutation for the offspring

- - - d: Combine population with the new offspring and select a new population from these

RRSL GA

3) Different Algorithms follow from adjusting (a,b,c,d) above:

- RRSL GA:

- - - a: Selection of offspring via rrsl non-dominating leaves. Maintain two copies of these offspring.

- - - b: Crossover of the offspring via moving averages idea

- - - c: Mutation for the offspring via Differential Evolution

- - - d: Tournament Selection on the (few) offspring + adjustedoffspring

NSGA2/SPEA2

4) Benchmark Algorithms also follow from adjusting (a,b,c,d) above:

- NSGA2/SPEA2:

- - - a: Selection of offspring using k-round tournament selection

- - - b: Standard Crossover to swap attributes for a given pair of individuals

- - - c: Standard Mutation to re-roll the dice on some individual's attribute chosen decision

- - - d: Selection via NSGA2 or SPEA2 sub-routines.

Goals

5) The Goal of the Research:

- We want RRSL GA to perform as well as NSGA2/SPEA2.

- There's an argument on what it means to be better. Solutions lie across the optimal pareto frontier, but different algorithms may discover solutions at different areas along the frontier.

- The absolute emphasis of the research: Improving upon the number of objective function evaluations. The theory is that RRSL GA will require log N the number of evaluations needed by NSGA2/SPEA, and still do just as well.

Results

6) Results

charts: http://unbox.org/stuff/var/joe/joe/fall2012/pom2rrslTesting/charts/1-16-2013/

--- full results pending ---

Wednesday, January 9, 2013

Erin's update

Her is the current pseudocode and code for my alg. BicBin is the alg that I will run comparisons against.

PSEUDOCODE

loop over (confidence levels, or coverage)

# ATTRIBUTE SELECTION

run FP Growth

extract unique 4-mers from results

compile the list of selected attribute numbers

derive unselected attribute numbers

# CLUSTERING

EM cluster db using selected attributes

label rows by the clusters

parse results

# CLASSIFIED TESTING

divide db into 10 bins

run nb, JRip, oner, prism, ZeroR, j48 using cluster labels as the class

keep clusters that are greater than the db average (effect size),

AND pd, pf, calc you gave me today in classification

record the cluster patterns, and rows and columns

change 1s in the clusters to 0s (non overlapping biclusters)

end loop

? do I recalculate the db average or use inital avg

? classification performance criteria

CODE (thus far)

########################

#!/bin/bash

Jar="/usr/share/java/weka.jar"

Weka="nice -n 20 java -Xmx2048M -cp $Jar "

Input="/tiny/data/DNA/WordRules/secondTry/ell4Words.arff"

CONFIDENCE=0.99

STEP=0.05

MinRulesPerc=.60

UPPER=1.0

InvAttSet=""

DbAvg=0

#COPY THE INPUT DATA

cp $Input /tiny/data/trialInput.arff

Input="/tiny/data/trialInput.arff"

cat $Input | ./dbAvg.awk

#GET THE DB AVERAGE

#DbAvg=`cat $Input | ./dbAvg.awk`

#echo "DbAvg" $DbAvg

#LOOP OVER CONFIDENCE LEVELS or MinRulesPerc(aka coverage)

#for ((CONFIDENCE=99;CONFIDENCE==80;CONFIDENCE=CONFIDENCE-5)); do

#GET ATTRIBUTES FROM FP GROWTH

#run FPGrowth

$Weka weka.associations.FPGrowth -P 1 -I -1 -N 20 -T 0 -C $CONFIDENCE -D $STEP -U $UPPER -M $MinRulesPerc -t $Input > fpResults$MinRulesPerc$CONFIDENCE.txt

#extract unique 4-mers from FP

cat fpResults$MinRulesPerc$CONFIDENCE.txt |

sed 's/_binarized/ /g' |

sed -e 's/=1/ /g' -e 's/=0/ /g' |

./fpFieldParser.awk > attA$MinRulesPerc$CONFIDENCE.txt

#compile list of attribute numbers

cat fieldsNum.txt separator.txt attA$MinRulesPerc$CONFIDENCE.txt |

./makeFieldNumList.awk > fieldListA$MinRulesPerc$CONFIDENCE.txt

#remove last comma

sed 's/.\{1\}$//' attIndicies.txt > fldListA$MinRulesPerc$CONFIDENCE.txt

sed 's/.\{1\}$//' inverseAttIndicies.txt > fldListIA$MinRulesPerc$CONFIDENCE.txt

#put list into variable

InvAttSet=`cat fldListIA$MinRulesPerc$CONFIDENCE.txt`

echo $InvAttSet

#cluster all instances on the reduced attribute list

$Weka weka.filters.unsupervised.attribute.AddCluster -W "weka.clusterers.EM -t $Input -I 100 -N -1 -M 1.0E-6 -S 100" -I $InvAttSet

#keep clusters that are 30% more than the db average

#AND Test well 70+

#done

####

used 'time' and got these numbers

real 0m33.106s

user 0m38.313s

sys 0m0.890s

PSEUDOCODE

loop over (confidence levels, or coverage)

# ATTRIBUTE SELECTION

run FP Growth

extract unique 4-mers from results

compile the list of selected attribute numbers

derive unselected attribute numbers

# CLUSTERING

EM cluster db using selected attributes

label rows by the clusters

parse results

# CLASSIFIED TESTING

divide db into 10 bins

run nb, JRip, oner, prism, ZeroR, j48 using cluster labels as the class

keep clusters that are greater than the db average (effect size),

AND pd, pf, calc you gave me today in classification

record the cluster patterns, and rows and columns

change 1s in the clusters to 0s (non overlapping biclusters)

end loop

? do I recalculate the db average or use inital avg

? classification performance criteria

CODE (thus far)

########################

#!/bin/bash

Jar="/usr/share/java/weka.jar"

Weka="nice -n 20 java -Xmx2048M -cp $Jar "

Input="/tiny/data/DNA/WordRules/secondTry/ell4Words.arff"

CONFIDENCE=0.99

STEP=0.05

MinRulesPerc=.60

UPPER=1.0

InvAttSet=""

DbAvg=0

#COPY THE INPUT DATA

cp $Input /tiny/data/trialInput.arff

Input="/tiny/data/trialInput.arff"

cat $Input | ./dbAvg.awk

#GET THE DB AVERAGE

#DbAvg=`cat $Input | ./dbAvg.awk`

#echo "DbAvg" $DbAvg

#LOOP OVER CONFIDENCE LEVELS or MinRulesPerc(aka coverage)

#for ((CONFIDENCE=99;CONFIDENCE==80;CONFIDENCE=CONFIDENCE-5)); do

#GET ATTRIBUTES FROM FP GROWTH

#run FPGrowth

$Weka weka.associations.FPGrowth -P 1 -I -1 -N 20 -T 0 -C $CONFIDENCE -D $STEP -U $UPPER -M $MinRulesPerc -t $Input > fpResults$MinRulesPerc$CONFIDENCE.txt

#extract unique 4-mers from FP

cat fpResults$MinRulesPerc$CONFIDENCE.txt |

sed 's/_binarized/ /g' |

sed -e 's/=1/ /g' -e 's/=0/ /g' |

./fpFieldParser.awk > attA$MinRulesPerc$CONFIDENCE.txt

#compile list of attribute numbers

cat fieldsNum.txt separator.txt attA$MinRulesPerc$CONFIDENCE.txt |

./makeFieldNumList.awk > fieldListA$MinRulesPerc$CONFIDENCE.txt

#remove last comma

sed 's/.\{1\}$//' attIndicies.txt > fldListA$MinRulesPerc$CONFIDENCE.txt

sed 's/.\{1\}$//' inverseAttIndicies.txt > fldListIA$MinRulesPerc$CONFIDENCE.txt

#put list into variable

InvAttSet=`cat fldListIA$MinRulesPerc$CONFIDENCE.txt`

echo $InvAttSet

#cluster all instances on the reduced attribute list

$Weka weka.filters.unsupervised.attribute.AddCluster -W "weka.clusterers.EM -t $Input -I 100 -N -1 -M 1.0E-6 -S 100" -I $InvAttSet

#keep clusters that are 30% more than the db average

#AND Test well 70+

#done

####

used 'time' and got these numbers

real 0m33.106s

user 0m38.313s

sys 0m0.890s

Vasil - Progress Report

1. From the previous meeting, I showed that we can approximate the NEMS score results by training on 10% of the population.

However, by clustering (cluster+LR and cluster+Centroid) we did not get better results than by applying linear regression on the entire instances space.

I tried a new method of grid clustering. Instead of using Recursive FastMap, the projected instance space was split into a specific number of equally-sized quadrants, then the quadrants were clustered using the variance filter.

I used different number of quadrants to split the space, but still clustering could not outperform the linear regression on the entire space.

2. Next I have shifted by attention on applying the clustering methods on defect prediction data. (I am currently using the ant data set from promise repository).

- The instance space is projected using FastMap.

- Then the instance space is recursively split into quadrants on the median of x and y dimensions.

- The clusters are created using Entropy to combine quadrants (i.e. a quadrant is not added if the entropy is increased).

ToDo:

- Apply Naive Bayes on the entire space and on clusters. (The implementation will be finished today and I can report the results).

- Use information gain to define the attributes that contribute on clustering.

Vasil

However, by clustering (cluster+LR and cluster+Centroid) we did not get better results than by applying linear regression on the entire instances space.

I tried a new method of grid clustering. Instead of using Recursive FastMap, the projected instance space was split into a specific number of equally-sized quadrants, then the quadrants were clustered using the variance filter.

I used different number of quadrants to split the space, but still clustering could not outperform the linear regression on the entire space.

2. Next I have shifted by attention on applying the clustering methods on defect prediction data. (I am currently using the ant data set from promise repository).

- The instance space is projected using FastMap.

- Then the instance space is recursively split into quadrants on the median of x and y dimensions.

- The clusters are created using Entropy to combine quadrants (i.e. a quadrant is not added if the entropy is increased).

ToDo:

- Apply Naive Bayes on the entire space and on clusters. (The implementation will be finished today and I can report the results).

- Use information gain to define the attributes that contribute on clustering.

Vasil

Tuesday, December 11, 2012

Kernel Mean Match (KMM) for cross-project defect prediction

Context: cross-project defect prediction

Algorithm: Kernel Mean Match

Algorithm: Kernel Mean Match

where B limits the scope of discrepancy between Pr and Pr′ and esp ensures that the measure β(x) Pr(x) is close to a probability distribution.

Data: 5 open source project, process metrics (change data)

performance indicators: AUC, F, Recall, Precision

Experiments:

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.

Naive Bayes (B=5, esp=0.01)

KMM vs. non-weights

Observations:

1) after parameter tuning, KMM helps to improve prediction performance, but not so significant (2%)

2) long running time! 900 training instances + 300 test instances: 30min; 10,000 training instances + 300 test instance: 130 min!

Questions:

1) KMM may be a stupid idea for cross defect prediction though it's beautiful on mathematics?

New results(2012-12-18):

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.

Data: 5 open source project, process metrics (change data)

performance indicators: AUC, F, Recall, Precision

Experiments:

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.

Naive Bayes (B=5, esp=0.01)

| AUC | Acc | F | F_2 | Recall | Precision | PF | TestSet | TrainSet |

| 0.6749 | 0.8517 | 0.2238 | 0.1752 | 0.1531 | 0.4156 | 0.0349 | PDE_change | JDT_change |

| 0.6731 | 0.6451 | 0.2282 | 0.1586 | 0.1318 | 0.8500 | 0.0154 | equinox_change | JDT_change |

| 0.7555 | 0.9204 | 0.3529 | 0.2708 | 0.2344 | 0.7143 | 0.0096 | lucene_change | JDT_change |

| 0.6424 | 0.8598 | 0.1092 | 0.0778 | 0.0653 | 0.3333 | 0.0198 | mylyn_change | JDT_change |

| 0.7185 | 0.6229 | 0.4551 | 0.6002 | 0.7621 | 0.3244 | 0.4134 | JDT_change | PDE_change |

| 0.7953 | 0.6944 | 0.4343 | 0.3381 | 0.2946 | 0.8261 | 0.0410 | equinox_change | PDE_change |

| 0.7772 | 0.8857 | 0.4060 | 0.4154 | 0.4219 | 0.3913 | 0.0670 | lucene_change | PDE_change |

| 0.5753 | 0.8448 | 0.2684 | 0.2345 | 0.2163 | 0.3533 | 0.0600 | mylyn_change | PDE_change |

| 0.7488 | 0.6810 | 0.4838 | 0.6037 | 0.7233 | 0.3634 | 0.3300 | JDT_change | equinox_change |

| 0.6985 | 0.7508 | 0.3935 | 0.4871 | 0.5789 | 0.2980 | 0.2213 | PDE_change | equinox_change |

| 0.7118 | 0.7424 | 0.3101 | 0.4444 | 0.6250 | 0.2062 | 0.2456 | lucene_change | equinox_change |

| 0.5615 | 0.7873 | 0.2826 | 0.3030 | 0.3184 | 0.2541 | 0.1416 | mylyn_change | equinox_change |

| 0.7411 | 0.6911 | 0.4901 | 0.6056 | 0.7184 | 0.3719 | 0.3161 | JDT_change | lucene_change |

| 0.6889 | 0.7916 | 0.3735 | 0.4133 | 0.4450 | 0.3218 | 0.1522 | PDE_change | lucene_change |

| 0.7825 | 0.7222 | 0.5588 | 0.4822 | 0.4419 | 0.7600 | 0.0923 | equinox_change | lucene_change |

| 0.6489 | 0.8281 | 0.3043 | 0.2929 | 0.2857 | 0.3256 | 0.0897 | mylyn_change | lucene_change |

| 0.6040 | 0.3400 | 0.3286 | 0.5038 | 0.7816 | 0.2080 | 0.7750 | JDT_change | mylyn_change |

| 0.5824 | 0.5311 | 0.2626 | 0.3958 | 0.5981 | 0.1682 | 0.4798 | PDE_change | mylyn_change |

| 0.5599 | 0.6636 | 0.5068 | 0.4605 | 0.4341 | 0.6087 | 0.1846 | equinox_change | mylyn_change |

| 0.6228 | 0.7829 | 0.2857 | 0.3731 | 0.4688 | 0.2055 | 0.1850 | lucene_change | mylyn_change |

KMM vs. non-weights

| B=5 esp=0.01 | non-weights | |||

| AUC | AUC | improvement | mean(imp) | median(imp) |

| 0.6749 | 0.6313 | 0.0436 | 0.0218 | 0.0228 |

| 0.6731 | 0.7702 | -0.0970 | ||

| 0.7555 | 0.7149 | 0.0406 | ||

| 0.6424 | 0.4153 | 0.2271 | ||

| 0.7185 | 0.7207 | -0.0022 | ||

| 0.7953 | 0.7843 | 0.0110 | ||

| 0.7772 | 0.7413 | 0.0359 | ||

| 0.5753 | 0.5716 | 0.0038 | ||

| 0.7488 | 0.7105 | 0.0384 | ||

| 0.6985 | 0.6843 | 0.0142 | ||

| 0.7118 | 0.7257 | -0.0139 | ||

| 0.5615 | 0.5131 | 0.0484 | ||

| 0.7411 | 0.7098 | 0.0313 | ||

| 0.6889 | 0.6954 | -0.0065 | ||

| 0.7825 | 0.7739 | 0.0086 | ||

| 0.6489 | 0.6140 | 0.0349 | ||

| 0.6040 | 0.6305 | -0.0265 | ||

| 0.5824 | 0.6424 | -0.0601 | ||

| 0.5599 | 0.5102 | 0.0497 | ||

| 0.6228 | 0.5678 | 0.0550 |

Observations:

1) after parameter tuning, KMM helps to improve prediction performance, but not so significant (2%)

2) long running time! 900 training instances + 300 test instances: 30min; 10,000 training instances + 300 test instance: 130 min!

Questions:

1) KMM may be a stupid idea for cross defect prediction though it's beautiful on mathematics?

New results(2012-12-18):

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.

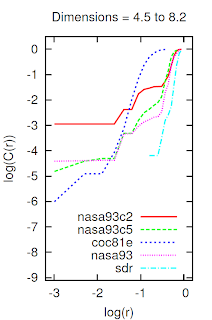

Fig. 1 Improvement of PD

Fig. 2. Improvement of PF

Subscribe to:

Posts (Atom)