Algorithm: Kernel Mean Matching (KMM)

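KMM assigns each training instance x a weight β(x) so that the reweighted training distribution β(x) Pr(x) matches the test distribution Pr′(x) in a kernel feature space. It has two parameters: B limits the scope of discrepancy between Pr and Pr′ (it is the upper bound on each weight), and ε (written esp below) ensures that the measure β(x) Pr(x) is close to a probability distribution.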
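For reference, KMM is usually stated as a quadratic program: minimize ½ βᵀKβ − κᵀβ subject to 0 ≤ β_i ≤ B and |Σ_i β_i − n| ≤ n·ε, where K_ij = k(x_i, x_j) over the n training instances and κ_i = (n/m) Σ_j k(x_i, x′_j) over the m test instances. The sketch below solves this with projected gradient descent using only the standard library. The Gaussian kernel, `sigma`, the step size, the iteration count, and the toy data are assumptions made for illustration, not the settings used in these experiments; a dedicated QP solver would normally replace the loop.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def project(v, B, lo, hi):
    """Euclidean projection of v onto {0 <= b_i <= B, lo <= sum(b) <= hi}."""
    def shifted_clip(t):
        return [min(B, max(0.0, x - t)) for x in v]
    b = shifted_clip(0.0)
    total = sum(b)
    if lo <= total <= hi:
        return b
    target = hi if total > hi else lo
    # sum(shifted_clip(t)) is non-increasing in t, so bisect on the shift t.
    t_lo, t_hi = min(v) - B, max(v)
    for _ in range(60):
        mid = 0.5 * (t_lo + t_hi)
        if sum(shifted_clip(mid)) > target:
            t_lo = mid
        else:
            t_hi = mid
    return shifted_clip(0.5 * (t_lo + t_hi))

def kmm_weights(train, test, B=5.0, eps=0.01, sigma=1.0, iters=2000):
    """Approximate KMM weights beta for the training instances."""
    n, m = len(train), len(test)
    K = [[gaussian_kernel(a, b, sigma) for b in train] for a in train]
    kappa = [n / m * sum(gaussian_kernel(a, t, sigma) for t in test)
             for a in train]
    # trace(K) = n bounds the largest eigenvalue of K, so 1/n is a safe step.
    step = 1.0 / n
    lo, hi = n * (1.0 - eps), n * (1.0 + eps)
    beta = [1.0] * n
    for _ in range(iters):
        # Gradient of 0.5 * beta^T K beta - kappa^T beta is K beta - kappa.
        grad = [sum(K[i][j] * beta[j] for j in range(n)) - kappa[i]
                for i in range(n)]
        beta = project([b - step * g for b, g in zip(beta, grad)], B, lo, hi)
    return beta

# Toy 1-D data (made up): three training points near the test data, three far away.
train = [[0.0], [0.1], [0.2], [3.0], [3.1], [3.2]]
test = [[0.0], [0.1], [0.15]]
weights = kmm_weights(train, test, B=5.0, eps=0.01)
print([round(w, 3) for w in weights])
```

In this toy run the training points close to the test data should receive most of the weight. The weights would then be passed to the learner as per-instance training weights.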

Data: 5 open-source projects (JDT, PDE, equinox, lucene, mylyn), process metrics (change data)

performance indicators: AUC, F, Recall, Precision

Experiments:

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.
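The grid above contains 6 × 6 = 36 (B, esp) combinations. A minimal sketch of enumerating it follows; `toy_evaluate` is a hypothetical stand-in for "train with KMM weights and return AUC" and is not part of the original experiments.

```python
from itertools import product

B_VALUES = [1, 3, 5, 10, 20, 50]
EPS_VALUES = [0.01, 0.1, 0.5, 1, 5, 10]

def grid_search(evaluate):
    """Return (best_score, B, eps) over the full B x eps grid."""
    return max((evaluate(B, eps), B, eps)
               for B, eps in product(B_VALUES, EPS_VALUES))

def toy_evaluate(B, eps):
    """Hypothetical scoring function; a real one would train and return AUC."""
    return -abs(B - 5) - abs(eps - 0.01)

n_settings = len(list(product(B_VALUES, EPS_VALUES)))
best = grid_search(toy_evaluate)
print(n_settings, best)
```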

Naive Bayes (B=5, esp=0.01)


| AUC | Acc | F | F_2 | Recall | Precision | PF | TestSet | TrainSet |
|---|---|---|---|---|---|---|---|---|
| 0.6749 | 0.8517 | 0.2238 | 0.1752 | 0.1531 | 0.4156 | 0.0349 | PDE_change | JDT_change |
| 0.6731 | 0.6451 | 0.2282 | 0.1586 | 0.1318 | 0.8500 | 0.0154 | equinox_change | JDT_change |
| 0.7555 | 0.9204 | 0.3529 | 0.2708 | 0.2344 | 0.7143 | 0.0096 | lucene_change | JDT_change |
| 0.6424 | 0.8598 | 0.1092 | 0.0778 | 0.0653 | 0.3333 | 0.0198 | mylyn_change | JDT_change |
| 0.7185 | 0.6229 | 0.4551 | 0.6002 | 0.7621 | 0.3244 | 0.4134 | JDT_change | PDE_change |
| 0.7953 | 0.6944 | 0.4343 | 0.3381 | 0.2946 | 0.8261 | 0.0410 | equinox_change | PDE_change |
| 0.7772 | 0.8857 | 0.4060 | 0.4154 | 0.4219 | 0.3913 | 0.0670 | lucene_change | PDE_change |
| 0.5753 | 0.8448 | 0.2684 | 0.2345 | 0.2163 | 0.3533 | 0.0600 | mylyn_change | PDE_change |
| 0.7488 | 0.6810 | 0.4838 | 0.6037 | 0.7233 | 0.3634 | 0.3300 | JDT_change | equinox_change |
| 0.6985 | 0.7508 | 0.3935 | 0.4871 | 0.5789 | 0.2980 | 0.2213 | PDE_change | equinox_change |
| 0.7118 | 0.7424 | 0.3101 | 0.4444 | 0.6250 | 0.2062 | 0.2456 | lucene_change | equinox_change |
| 0.5615 | 0.7873 | 0.2826 | 0.3030 | 0.3184 | 0.2541 | 0.1416 | mylyn_change | equinox_change |
| 0.7411 | 0.6911 | 0.4901 | 0.6056 | 0.7184 | 0.3719 | 0.3161 | JDT_change | lucene_change |
| 0.6889 | 0.7916 | 0.3735 | 0.4133 | 0.4450 | 0.3218 | 0.1522 | PDE_change | lucene_change |
| 0.7825 | 0.7222 | 0.5588 | 0.4822 | 0.4419 | 0.7600 | 0.0923 | equinox_change | lucene_change |
| 0.6489 | 0.8281 | 0.3043 | 0.2929 | 0.2857 | 0.3256 | 0.0897 | mylyn_change | lucene_change |
| 0.6040 | 0.3400 | 0.3286 | 0.5038 | 0.7816 | 0.2080 | 0.7750 | JDT_change | mylyn_change |
| 0.5824 | 0.5311 | 0.2626 | 0.3958 | 0.5981 | 0.1682 | 0.4798 | PDE_change | mylyn_change |
| 0.5599 | 0.6636 | 0.5068 | 0.4605 | 0.4341 | 0.6087 | 0.1846 | equinox_change | mylyn_change |
| 0.6228 | 0.7829 | 0.2857 | 0.3731 | 0.4688 | 0.2055 | 0.1850 | lucene_change | mylyn_change |
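As a consistency check on the table, F and F_2 can be recomputed from Precision and Recall. The sketch assumes that F_2 is the F-beta score with beta = 2, which the notes do not state explicitly; the first row (train JDT_change, test PDE_change) is used as the example.

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score; beta > 1 weights recall more heavily than precision."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)

# Precision and Recall from the first table row.
precision, recall = 0.4156, 0.1531
f1 = f_beta(precision, recall)
f2 = f_beta(precision, recall, beta=2.0)
print(round(f1, 4), round(f2, 4))  # prints 0.2238 0.1752, matching the F and F_2 columns
```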

KMM vs. non-weights

| AUC (KMM, B=5, esp=0.01) | AUC (non-weights) | improvement | mean(imp) | median(imp) |
|---|---|---|---|---|
| 0.6749 | 0.6313 | 0.0436 | 0.0218 | 0.0228 |
| 0.6731 | 0.7702 | -0.0970 | | |
| 0.7555 | 0.7149 | 0.0406 | | |
| 0.6424 | 0.4153 | 0.2271 | | |
| 0.7185 | 0.7207 | -0.0022 | | |
| 0.7953 | 0.7843 | 0.0110 | | |
| 0.7772 | 0.7413 | 0.0359 | | |
| 0.5753 | 0.5716 | 0.0038 | | |
| 0.7488 | 0.7105 | 0.0384 | | |
| 0.6985 | 0.6843 | 0.0142 | | |
| 0.7118 | 0.7257 | -0.0139 | | |
| 0.5615 | 0.5131 | 0.0484 | | |
| 0.7411 | 0.7098 | 0.0313 | | |
| 0.6889 | 0.6954 | -0.0065 | | |
| 0.7825 | 0.7739 | 0.0086 | | |
| 0.6489 | 0.6140 | 0.0349 | | |
| 0.6040 | 0.6305 | -0.0265 | | |
| 0.5824 | 0.6424 | -0.0601 | | |
| 0.5599 | 0.5102 | 0.0497 | | |
| 0.6228 | 0.5678 | 0.0550 | | |
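The summary figures in the table can be reproduced from the improvement column. The sketch below copies that column as printed (already rounded to four decimals) and also counts how many train/test pairs improved.

```python
from statistics import mean, median

# AUC improvement of KMM over the unweighted run, one value per train/test pair.
improvement = [
    0.0436, -0.0970, 0.0406, 0.2271, -0.0022,
    0.0110, 0.0359, 0.0038, 0.0384, 0.0142,
    -0.0139, 0.0484, 0.0313, -0.0065, 0.0086,
    0.0349, -0.0265, -0.0601, 0.0497, 0.0550,
]

mean_imp = mean(improvement)
median_imp = median(improvement)
wins = sum(1 for d in improvement if d > 0)
losses = sum(1 for d in improvement if d < 0)
print(round(mean_imp, 4), median_imp, wins, losses)
```

The mean comes out at about 0.0218 and the median at about 0.0228, with 14 pairs improving and 6 getting worse.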

Observations:

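1) After parameter tuning, KMM improves prediction performance, but only slightly: AUC rises by about 0.02 on average (mean 0.0218, median 0.0228 over the 20 train/test pairs), and 6 of the 20 pairs get worse.

2) Long running time: 900 training instances + 300 test instances take 30 min; 10,000 training instances + 300 test instances take 130 min.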
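A rough way to read the two timings is to compute the scaling exponent they imply and the size of a dense kernel matrix. Both helpers are illustrative: the notes do not say how the kernel matrix was stored, so the 8-bytes-per-entry dense matrix is an assumption, and two measurements give only a coarse indication.

```python
import math

def scaling_exponent(n1, t1, n2, t2):
    """Exponent p in t ~ n**p implied by two (size, time) measurements."""
    return math.log(t2 / t1) / math.log(n2 / n1)

def dense_kernel_bytes(n, bytes_per_entry=8):
    """Memory for an n x n kernel matrix of doubles (assumed storage)."""
    return n * n * bytes_per_entry

# Training-set sizes and running times (minutes) from observation 2.
p = scaling_exponent(900, 30, 10_000, 130)
small = dense_kernel_bytes(900)
large = dense_kernel_bytes(10_000)
print(round(p, 2), small, large)
```

An exponent well below 2 would suggest that the timings are not dominated by the n × n kernel matrix alone; fixed overhead in the smaller run is one possible explanation.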

Questions:

1) Is KMM a poor fit for cross-project defect prediction, even though it is mathematically elegant?

New results (2012-12-18):

parameter tuning: B=1,3,5,10,20,50; esp=0.01, 0.1, 0.5, 1, 5, 10.

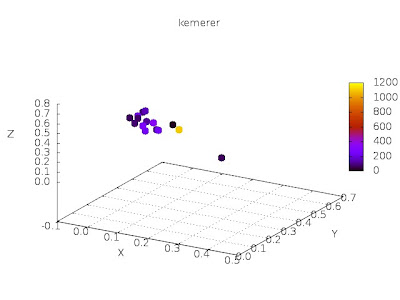

Fig. 1. Improvement of PD

Fig. 2. Improvement of PF